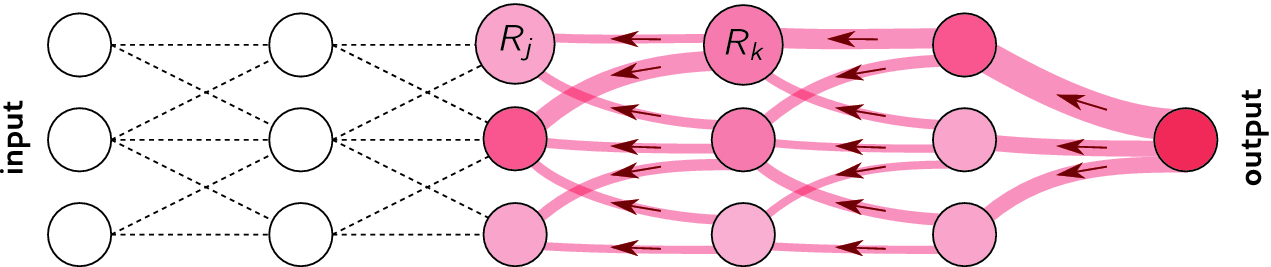

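Layer-wise relevance propagation (LRP) is a method for explaining the predictions of deep neural networks. It starts from the network's output and propagates a relevance score backward through the individual layers, redistributing it at each step until every input feature has been assigned a share of the prediction.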
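
As a rough illustration of that backward pass, here is a minimal NumPy sketch for a small fully connected ReLU network. It assumes the epsilon rule, which is only one of several published LRP propagation rules. The function name `lrp_epsilon` and the toy weights are hypothetical and chosen purely for this example.

```python
import numpy as np


def lrp_epsilon(weights, biases, x, eps=1e-6):
    """Sketch of epsilon-rule LRP for a small ReLU network (hypothetical helper).

    weights[i] has shape (n_in, n_out); the last layer is linear.
    Returns one relevance score per input feature.
    """
    # Forward pass, keeping the activations that enter every layer.
    activations = [x]
    for i, (W, b) in enumerate(zip(weights, biases)):
        z = activations[-1] @ W + b
        is_last = i == len(weights) - 1
        activations.append(z if is_last else np.maximum(z, 0.0))

    # Start from the output score and redistribute it layer by layer.
    relevance = activations[-1].copy()
    layers = zip(reversed(weights), reversed(biases), reversed(activations[:-1]))
    for W, b, a in layers:
        z = a @ W + b
        z = z + eps * np.where(z >= 0, 1.0, -1.0)  # stabiliser, avoids dividing by zero
        s = relevance / z                           # relevance per unit of pre-activation
        relevance = a * (s @ W.T)                   # share passed down to each input of the layer
    return relevance


# Toy network (assumed values): 2 inputs -> 2 hidden ReLU units -> 1 output, no biases.
W1 = np.array([[1.0, -1.0], [2.0, 0.5]])
W2 = np.array([[1.0], [-2.0]])
weights, biases = [W1, W2], [np.zeros(2), np.zeros(1)]
x = np.array([1.0, 3.0])

output = np.maximum(x @ W1, 0.0) @ W2
relevance = lrp_epsilon(weights, biases, x)
print("output:", output, "input relevance:", relevance)
```

With zero biases, the input relevances in this sketch add up to the network output (up to the small `eps` term), which is the conservation property that LRP rules are designed around.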

Techniques of this kind help engineers learn more about how neural networks arrive at their outputs, and they are important in combating the problem of "black box operation" in artificial intelligence, where models become so large and complex that it is hard for humans to understand how they produce results.

Specifically, experts often compare layer-wise relevance propagation with DeepLIFT, which uses backpropagation to examine how the activation of each artificial neuron differs from a reference activation across the layers of the deep network. Some describe layer-wise relevance propagation as a form of DeepLIFT in which the reference activations of all artificial neurons are set to the same baseline, typically zero.
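
The idea of scoring inputs by their difference from a reference can be sketched in a few lines of NumPy. The example below is a simplified take on DeepLIFT's rescale rule for a small ReLU network, not the reference implementation: the function name `deeplift_rescale`, the toy weights and the choice to use a multiplier of zero when a neuron's pre-activation does not change are all assumptions made for illustration.

```python
import numpy as np


def deeplift_rescale(weights, biases, x, reference):
    """Simplified rescale-rule attribution (hypothetical helper).

    Returns the contribution of each input's difference from the reference
    to the change in the network output.
    """
    def forward(v):
        pre, post = [], [v]
        for i, (W, b) in enumerate(zip(weights, biases)):
            z = post[-1] @ W + b
            pre.append(z)
            is_last = i == len(weights) - 1
            post.append(z if is_last else np.maximum(z, 0.0))
        return pre, post

    pre_x, post_x = forward(x)
    pre_r, post_r = forward(reference)

    # Multipliers are chained backward from the output to the input.
    m = np.ones_like(post_x[-1])
    for i in reversed(range(len(weights))):
        dz = pre_x[i] - pre_r[i]            # change in pre-activation vs. reference
        dy = post_x[i + 1] - post_r[i + 1]  # change in activation vs. reference
        changed = np.abs(dz) > 1e-9
        # Rescale rule: multiplier = dy / dz (assumed to be 0 when dz is ~0).
        ratio = np.where(changed, dy / np.where(changed, dz, 1.0), 0.0)
        m = (m * ratio) @ weights[i].T      # linear rule through the weights
    return m * (x - reference)


# Toy network (assumed values): 2 inputs -> 2 hidden ReLU units -> 1 output.
W1 = np.array([[1.0, -1.0], [2.0, 0.5]])
W2 = np.array([[1.0], [-2.0]])
weights, biases = [W1, W2], [np.zeros(2), np.zeros(1)]


def predict(v):
    return (np.maximum(v @ W1, 0.0) @ W2)[0]


x = np.array([1.0, 3.0])
zero_ref = np.zeros(2)
other_ref = np.array([1.0, 0.0])

contrib_zero = deeplift_rescale(weights, biases, x, zero_ref)
contrib_other = deeplift_rescale(weights, biases, x, other_ref)
print("zero baseline:", contrib_zero)
print("other baseline:", contrib_other)
```

In both cases the contributions sum to the difference between the output for `x` and the output for the reference. Changing the reference changes the attribution, which is why the choice of baseline matters when comparing these methods.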

Techniques like layer-wise relevance propagation, DeepLIFT and LIME can be combined with Shapley regression, Shapley sampling and other methods that work to provide additional insight into machine learning algorithms.
