As an IT or system administrator, one of the phrases we least want to hear from customers or users is “the server is down.” Server downtime can be tremendously costly for businesses, which now rely on technology solutions more than ever. Data, and the information it contains, drives most businesses, many of which have a strong web presence and conduct most of their sales and other activities online. For most of today’s technology-driven organizations, preventing downtime of business-critical servers is an absolute must. In this post, we will identify and discuss 5 server downtime prevention methods to help businesses prevent downtime of business-critical server workloads.

Preventing server downtime in 5 ways for critical server workloads

Like many challenging problems, preventing server downtime calls for a multi-layered approach, so that businesses are not relying on any single solution. In any preventative or high-availability strategy, you do not want to have all your “eggs in one basket.” Instead, you want multiple layers of protection and resiliency. What are those layers? Let’s look at the following 5 server downtime prevention methods:

- Backups and disaster recovery

- High-availability

- Monitoring and alerting

- Updates and security patches

- Multiple network paths and geolocations

1. Backups and disaster recovery

When looking at preventing server downtime, we would be remiss to leave out the importance of backups and disaster recovery. You may be wondering: aren’t backups and disaster recovery for when you already have server downtime? Yes, that is true. Generally, some disruption (downtime) is what leads to recovering resources from a backup, so backups don’t prevent downtime altogether.

However, the discussion here centers on how long server downtime lasts. Without backups, the downtime caused by a disaster could far exceed the downtime of events where backups and a solid disaster recovery plan are in place. You want to make sure all of your data is protected, whether that means files, folders, virtual machines, applications, or other resources.

Well-designed disaster recovery strategies build on the 3-2-1 backup best practice. With 3-2-1 backups, you want at least (3) copies of your data stored on at least (2) types of media, with at least (1) copy stored offsite. The premise of the 3-2-1 rule is risk reduction: by spreading copies out, you make the likelihood of losing all your data everywhere slim to none. That said, you want to use a data protection solution that aligns with the 3-2-1 backup best practice and allows your organization to keep multiple copies of its data with diversity in its storage. I have been using NAKIVO Backup & Replication in my home lab environment and in many production environments, and it has performed exceptionally well.
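To make the 3-2-1 rule concrete, here is a minimal Python sketch that checks an inventory of data copies against it. The `BackupCopy` type, the `check_3_2_1` function, and the location and media labels are hypothetical names for illustration, not part of NAKIVO or any other product, and the sketch assumes the production copy counts as one of the three copies (a common reading of the rule).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BackupCopy:
    """One copy of a data set; the production copy counts as one of the three."""
    location: str    # hypothetical label, e.g. "primary-datacenter"
    media_type: str  # hypothetical label, e.g. "disk", "nas", "tape", "object-storage"
    offsite: bool    # True if the copy lives away from the primary site


def check_3_2_1(copies: list[BackupCopy]) -> list[str]:
    """Return the 3-2-1 rules this inventory breaks; an empty list means it passes."""
    problems = []
    if len(copies) < 3:
        problems.append(f"only {len(copies)} copies, need at least 3")
    media = {c.media_type for c in copies}
    if len(media) < 2:
        problems.append(f"only {len(media)} media type(s), need at least 2")
    if not any(c.offsite for c in copies):
        problems.append("no offsite copy")
    return problems


copies = [
    BackupCopy("primary-datacenter", "disk", offsite=False),
    BackupCopy("synology-nas", "nas", offsite=False),
    BackupCopy("cloud-bucket", "object-storage", offsite=True),
]
print(check_3_2_1(copies))  # → []
```

Dropping the cloud copy from the list would report two problems: too few copies and no offsite copy.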

NAKIVO offers all the core features you want to see in a modern backup solution today, including:

- A wide range of hypervisors supported

- Immutable backups

- Cloud support – Cloud workloads and backup repositories

- 2FA

- Cloud Software-as-a-Service support

- Site Recovery with Failover and Failback orchestration

- Replication

- Copy jobs for creating an extra copy of your data elsewhere

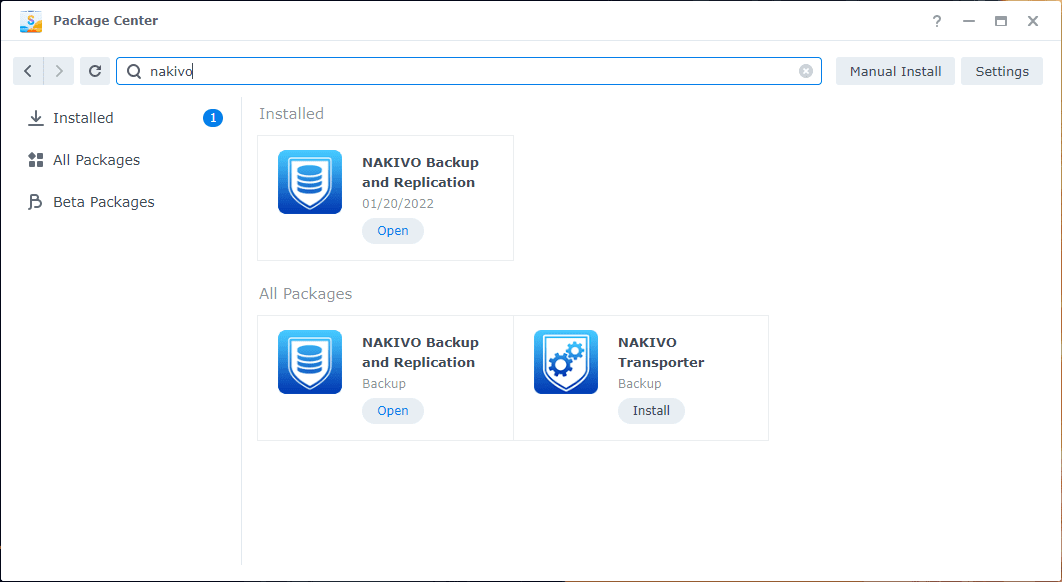

I am currently running NAKIVO in the home lab on a Synology NAS, where it installs easily as an app. NAKIVO is one of the few backup vendors offering this functionality, which allows running a self-contained backup appliance on NAS devices from a wide range of vendors. Check out my post about that here:

You can learn more about NAKIVO and download a free trial version of NAKIVO Backup & Replication here:

2. High-availability

Another great way to prevent server downtime is to run your servers in a high-availability configuration. This means a business-critical application does not depend on a single server that can go down. Instead, you configure your servers in a high-availability cluster that provides resiliency against hardware failures and other issues that may take a physical server down.

Modern hypervisors like VMware vSphere and Hyper-V have high availability built in, protecting virtual machines against a host failure. If the host a virtual machine runs on fails, the virtual machine is simply restarted on a different host in the cluster.
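The restart behavior described above can be pictured as a placement problem: when a host fails, each of its virtual machines needs a surviving host with enough free capacity. The Python below is a simplified sketch under stated assumptions (memory is the only constraint, and the largest VMs are placed first); it is not how vSphere HA or Hyper-V failover clustering actually decide placement, since both use their own logic and settings. The `plan_restarts` function and the host and VM names are hypothetical.

```python
from typing import Optional


def plan_restarts(failed_vms: dict[str, int],
                  free_mem_gb: dict[str, int]) -> dict[str, Optional[str]]:
    """Assign each VM from a failed host to a surviving host with enough free memory.

    failed_vms:  VM name -> memory it needs, in GB
    free_mem_gb: surviving host name -> free memory, in GB
    Returns VM name -> chosen host, or None if no surviving host can fit the VM.
    """
    free = dict(free_mem_gb)  # work on a copy so the caller's data is untouched
    plan: dict[str, Optional[str]] = {}
    # Place the largest VMs first, since they are the hardest to fit.
    for vm, need in sorted(failed_vms.items(), key=lambda kv: kv[1], reverse=True):
        candidates = [host for host, gb in free.items() if gb >= need]
        if not candidates:
            plan[vm] = None  # capacity shortfall: this VM stays down
            continue
        # Spread load by choosing the host with the most free memory.
        host = max(candidates, key=lambda h: free[h])
        free[host] -= need
        plan[vm] = host
    return plan


plan = plan_restarts({"sql01": 32, "web01": 8, "web02": 8},
                     {"esx02": 40, "esx03": 16})
print(plan)
```

If the surviving hosts lack capacity, some VMs map to None and stay down, which is why high-availability clusters are usually sized with enough spare capacity to absorb a host failure.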

Aside from running servers in a high-availability configuration, there is also application-level high availability, which protects workloads at the application layer. With it, a pool of servers runs a specific application. If one of the servers goes down, the other servers in the pool pick up the application traffic with little disruption.
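A health-aware round-robin pool is one simple way to picture this: requests rotate across the pool members, and any member marked down is skipped so the others absorb its traffic. The following is a minimal Python sketch; `ServerPool` and its methods are hypothetical names, and in practice a load balancer or the application’s own clustering layer plays this role.

```python
import itertools


class ServerPool:
    """Round-robin across pool members, skipping any member marked unhealthy."""

    def __init__(self, members: list[str]):
        self.members = list(members)
        self.healthy = set(members)
        self._ring = itertools.cycle(self.members)

    def mark_down(self, member: str) -> None:
        self.healthy.discard(member)

    def mark_up(self, member: str) -> None:
        if member in self.members:
            self.healthy.add(member)

    def next_server(self) -> str:
        # Walk at most one full lap of the ring looking for a healthy member.
        for _ in range(len(self.members)):
            candidate = next(self._ring)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy servers in the pool")


pool = ServerPool(["app1", "app2", "app3"])
pool.mark_down("app2")  # app2 fails; app1 and app3 share its traffic
picks = [pool.next_server() for _ in range(4)]
print(picks)
```

Once app2 is repaired, `mark_up("app2")` puts it back into rotation without any change for clients.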

An excellent example of application high availability is a SQL Server cluster running on top of Windows Server Failover Clustering (WSFC). If the active node fails, another SQL Server node in the cluster can take over as the active node.

In cloud environments, you may have an AWS load balancer in front of a group of EC2 instances. This ensures incoming traffic is not tied to a single EC2 instance that could fail: the load balancer can send requests to any healthy instance in the group, minimizing downtime.

When considering server downtime prevention methods, high-availability solutions ensure you have more than one physical or virtual resource to service requests in the environment.
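The benefit of having more than one resource can be estimated with basic probability. If redundant instances fail independently and any one of them can serve requests, the service is unavailable only when all of them are down, so the combined availability is 1 minus the product of each instance’s unavailability. Components that must all be up, such as a load balancer in front of the pool, multiply instead. This sketch assumes independent failures, which real environments only approximate (a shared power feed or a site-wide outage breaks the assumption), and the figures are illustrative, not vendor SLAs.

```python
import math


def parallel_availability(availabilities: list[float]) -> float:
    """Redundant components, any one of which can serve traffic.

    Assumes independent failures: the service is down only if every component is down.
    """
    return 1.0 - math.prod(1.0 - a for a in availabilities)


def series_availability(availabilities: list[float]) -> float:
    """Components that must all be up, e.g. a load balancer plus the pool behind it."""
    return math.prod(availabilities)


# Illustrative figures: two instances at 99% each behind a 99.99% load balancer.
pool = parallel_availability([0.99, 0.99])   # 1 - 0.01 * 0.01 = 0.9999
total = series_availability([0.9999, pool])  # 0.9999 * 0.9999, roughly 0.9998
print(f"pool: {pool:.4%}, end to end: {total:.4%}")
```

Two 99% instances together reach roughly 99.99%, which is the arithmetic behind not relying on a single server.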

3. Monitoring and alerting

Another key server downtime prevention method is monitoring and alerting. Without proper monitoring and alerting, customers or end-users often report downtime before IT is even aware of it. With proper monitoring and alerting in place, IT operations and others are alerted as soon as a server goes down, which limits the impact of the event by allowing quick triage and remediation to get the server back online.
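A common pattern behind “alert as soon as a server goes down” is a periodic probe plus a small state machine that alerts after a few consecutive failures (to avoid paging on a single dropped packet) and sends one recovery notice. The Python sketch below assumes a plain TCP port check is a good enough health signal; `DownAlerter`, `tcp_probe`, the three-failure threshold, and the host name are hypothetical, and a real deployment would more likely use a monitoring platform than hand-rolled code.

```python
import socket
from typing import Callable


def tcp_probe(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, DNS failure, unreachable, ...
        return False


class DownAlerter:
    """Alert once after `threshold` consecutive failed probes; notify once on recovery."""

    def __init__(self, name: str, probe: Callable[[], bool],
                 notify: Callable[[str], None], threshold: int = 3):
        self.name = name
        self.probe = probe
        self.notify = notify
        self.threshold = threshold
        self.failures = 0
        self.alerting = False

    def check_once(self) -> None:
        if self.probe():
            if self.alerting:
                self.notify(f"RECOVERED: {self.name} is responding again")
            self.failures = 0
            self.alerting = False
            return
        self.failures += 1
        if self.failures >= self.threshold and not self.alerting:
            self.alerting = True
            self.notify(f"DOWN: {self.name} failed {self.failures} consecutive checks")


# Hypothetical wiring: probe an HTTPS port and print alerts instead of paging someone.
monitor = DownAlerter("web01", probe=lambda: tcp_probe("web01.example.internal", 443),
                      notify=print)
```

A scheduler (cron, a systemd timer, or a loop with `time.sleep`) would call `monitor.check_once()` every minute or so, and `notify` would post to email or chat rather than print.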

Proper monitoring and alerting can also help prevent downtime altogether by flagging hardware that has not failed entirely but is showing errors indicative of imminent failure, such as a hard drive that is logging many errors but has not yet failed.
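Predictive drive alerts often rely on S.M.A.R.T. attributes such as `Reallocated_Sector_Ct`, `Current_Pending_Sector`, and `Offline_Uncorrectable`, which can climb while the drive still works. This Python sketch assumes you already collect raw attribute values (for example, with a tool such as smartctl) into a dictionary; the `predictive_disk_warnings` function and the thresholds are hypothetical starting points, not vendor guidance.

```python
# Hypothetical warning thresholds on raw S.M.A.R.T. values; tune per vendor and drive model.
WARN_THRESHOLDS = {
    "Reallocated_Sector_Ct": 1,
    "Current_Pending_Sector": 1,
    "Offline_Uncorrectable": 1,
    "Reported_Uncorrect": 1,
}


def predictive_disk_warnings(disk: str, smart_raw: dict[str, int],
                             thresholds: dict[str, int] = WARN_THRESHOLDS) -> list[str]:
    """Return warnings for a drive that still works but shows pre-failure signs.

    smart_raw maps attribute names to raw values already collected from the drive;
    attributes the drive does not report are treated as 0.
    """
    warnings = []
    for attr, limit in thresholds.items():
        value = smart_raw.get(attr, 0)
        if value >= limit:
            warnings.append(f"{disk}: {attr}={value} (threshold {limit})")
    return warnings


print(predictive_disk_warnings("sda", {"Reallocated_Sector_Ct": 24,
                                       "Current_Pending_Sector": 0}))
```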

With proactive monitoring and alerting, these imminent hardware failures can be triaged and the hardware replaced before it fails outright and causes unexpected server downtime. When monitoring resources, some will be deemed critical, with much tighter SLAs; others will have less stringent SLAs. Discovering and mapping these resources across the environment, and knowing which servers are tied to which applications, is critical to configuring monitoring and alerting that helps prevent server downtime.
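Mapping servers to applications pays off when an alert fires: the alert can list the affected applications and be escalated according to their SLA tier. Here is a minimal Python sketch; the inventory, tier numbers, priority names, and `alert_priority` function are hypothetical, and in practice this data would come from a CMDB or monitoring tool.

```python
# Hypothetical inventory: the servers each application depends on.
APP_SERVERS = {
    "erp": {"sql01", "app01"},
    "intranet": {"web03"},
    "file-shares": {"fs01"},
}
# Hypothetical SLA tiers: 1 is most critical.
APP_TIER = {"erp": 1, "intranet": 2, "file-shares": 3}
# How each tier is escalated (hypothetical policy).
TIER_PRIORITY = {1: "page-on-call", 2: "high", 3: "ticket"}


def alert_priority(down_server: str) -> tuple[str, list[str]]:
    """Return (priority, affected applications) for a server that went down."""
    affected = sorted(app for app, servers in APP_SERVERS.items()
                      if down_server in servers)
    if not affected:
        return "ticket", []  # unmapped server: still worth a ticket to investigate
    # The most critical affected application decides the escalation.
    most_critical = min(APP_TIER.get(app, 3) for app in affected)
    return TIER_PRIORITY[most_critical], affected


print(alert_priority("sql01"))
```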

4. Updates and security patches

Many consider applying updates counterintuitive to server uptime, since updates often require a server reboot, which results in downtime, albeit scheduled downtime. As a result, some organizations forgo critical updates. This is generally not a wise practice.

Updates, especially security updates, are critical software upgrades that fix bugs and close vulnerabilities that could be exploited by an attacker looking for unpatched software and systems. Downtime due to a cyberattack can be one of the most expensive types of disaster because of the destruction an attacker can cause, especially in a ransomware attack. A large-scale attack may originate from a single unpatched server that gave the attacker a way into your network.

Applying updates regularly helps plug security vulnerabilities and ensures your software applications run smoothly with the latest vendor-released patches and updates. In addition, if you implement proper high availability in the environment, patching is much easier and need not cause application downtime: servers can be taken out of service and patched as needed, even during business hours.
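The rolling approach described above can be sketched as a loop that patches one node at a time and stops if patching would leave too few healthy nodes in service, or if a node comes back unhealthy. `rolling_patch`, its callbacks, and `min_in_service` are hypothetical; the actual drain, update, and reboot steps depend on your platform.

```python
from typing import Callable


def rolling_patch(nodes: list[str],
                  patch: Callable[[str], None],
                  is_healthy: Callable[[str], bool],
                  min_in_service: int) -> list[str]:
    """Patch nodes one at a time, keeping at least min_in_service other nodes healthy.

    Halts with RuntimeError instead of risking an application outage.
    Returns the nodes patched, in order.
    """
    patched = []
    for node in nodes:
        # Count the other nodes that will keep serving while this one is down.
        in_service = sum(1 for other in nodes if other != node and is_healthy(other))
        if in_service < min_in_service:
            raise RuntimeError(
                f"refusing to patch {node}: only {in_service} other healthy node(s)")
        patch(node)  # drain, install updates, reboot: environment-specific
        if not is_healthy(node):
            raise RuntimeError(f"{node} is unhealthy after patching; halting rollout")
        patched.append(node)
    return patched


nodes = ["web01", "web02", "web03"]
order = rolling_patch(nodes, patch=lambda n: print(f"patching {n}"),
                      is_healthy=lambda n: True, min_in_service=2)
```

Halting rather than pressing on is the deliberate design choice here: a failed patch on one node is an inconvenience, while patching the whole pool into a broken state is an outage.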

Keeping updates applied is an important server downtime prevention method that helps to keep applications running smoothly and securely in the environment.

5. Multiple network paths and geolocations

Network connectivity is critically important for your business-critical servers. Boosting the resiliency of your network connection to the Internet helps ensure your customers and your hybrid workforce can connect to your business-critical server resources.

Because server infrastructure is so critical, and customers and end-users need to reach those resources around the clock, businesses need to build out high availability using multiple network paths and Internet providers, and even spread their critical servers across different geographic regions.
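With more than one Internet provider, something has to decide which path traffic takes. A simplified way to picture that decision: among links that are up, prefer the lowest-latency link whose packet loss is acceptable, and fall back to any working link otherwise. This is a Python sketch only; real routers and SD-WAN appliances apply their own, often per-application, policies, and the `Link` fields, link names, and 2% loss threshold are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Link:
    name: str          # hypothetical label, e.g. "isp-a-fiber"
    up: bool           # result of the latest reachability check
    latency_ms: float  # recent average round-trip time
    loss_pct: float    # recent packet loss, in percent


def pick_link(links: list[Link], max_loss_pct: float = 2.0) -> Optional[Link]:
    """Prefer the lowest-latency link that is up and under the loss threshold.

    If every working link exceeds the threshold, use the lowest-latency working
    link anyway; return None only when all links are down.
    """
    working = [link for link in links if link.up]
    acceptable = [link for link in working if link.loss_pct <= max_loss_pct]
    candidates = acceptable or working
    if not candidates:
        return None
    return min(candidates, key=lambda link: link.latency_ms)


links = [
    Link("isp-a-fiber", up=False, latency_ms=8.0, loss_pct=0.0),  # fiber cut
    Link("isp-b-cable", up=True, latency_ms=22.0, loss_pct=0.3),
    Link("lte-backup", up=True, latency_ms=55.0, loss_pct=1.0),
]
print(pick_link(links).name)  # → isp-b-cable
```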

Using SD-WAN solutions helps aggregate multiple ISPs into a single “pipe” handled by the SD-WAN appliance. Depending on the design, the public-facing IP address may not belong to any single ISP, so it can be moved between connections as needed. Redundant network connectivity is a crucially important server downtime prevention method when designing enterprise data center architecture. After all, it does not matter how resilient you are inside the data center if your customers and end-users cannot reach those resilient resources due to an Internet outage, fiber cut, or other event disrupting network connectivity.

Server Downtime Prevention FAQs

- Why is server downtime prevention important? Today’s businesses are more reliant on digital technologies than ever before. Most businesses rely on data and information being available 24x7x365, so preventing downtime is critically important. Every minute of unexpected server downtime can translate into tremendous costs for a business.

- How can you prevent server downtime? A multi-faceted solution is required. You need resiliency across various components of the modern data center, including network connectivity, servers, and applications. Backups are critically important to server downtime prevention since they allow recovery of data and services in the event of a disaster and must be considered when building out a business-continuity plan.

- What is high availability? High availability is the notion that you can withstand a failure and still have your data and services available. This can be accomplished in a number of ways, including server clusters, load balancing, and multiple network connections.
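Availability targets are often expressed as “nines,” and converting a target into allowed downtime per year makes the cost discussion concrete: 99.9% availability still permits about 525.6 minutes (roughly 8.8 hours) of downtime a year. The small Python helper below does that arithmetic, assuming a 365-day year; `allowed_downtime_minutes` is a hypothetical name.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes; ignores leap years


def allowed_downtime_minutes(availability_pct: float) -> float:
    """Maximum downtime per year permitted by an availability target in percent."""
    return MINUTES_PER_YEAR * (1 - availability_pct / 100)


for target in (99.0, 99.9, 99.99, 99.999):
    minutes = allowed_downtime_minutes(target)
    print(f"{target}% -> {minutes:.1f} minutes ({minutes / 60:.2f} hours) per year")
```

Each extra nine cuts the yearly downtime budget by a factor of ten, which is why higher targets demand the layered approach described in this post.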

Wrapping Up

This post has covered 5 server downtime prevention methods and multiple areas that must be considered to ensure your data and services are resilient and highly available. Keep in mind the points mentioned: backups and disaster recovery, high availability, monitoring and alerting, updates and security patches, and multiple network paths and geolocations. By formulating a layered approach, you can help to bolster your server downtime prevention strategy.
