As with any VMware environment where solutions are layered on top of the core vSphere infrastructure, shutting down an SDDC that runs vSphere with Tanzu follows a specific order of operations. Let’s look at what that order is and how to shut down vSphere with Tanzu.

Shut down vSphere with Tanzu

In an SDDC deployed according to VMware Validated Design, you deploy a single workload domain in addition to the management domain. If you deployed a vSphere with Tanzu workload domain, you shut down the SDDC management components in the vSphere with Tanzu workload domain first, before shutting down the SDDC components in the management domain.

Shutting down in the proper order keeps the necessary infrastructure, networking, and management services available to each component until it is shut down. The following table, taken from the official VMware KB, lists the shutdown order for a vSphere with Tanzu cluster.

| SHUTDOWN ORDER | SDDC COMPONENT |
|---|---|
| 1 | vCenter Server |
| 2 | Supervisor Control Plane virtual machines |
| 3 | Tanzu Kubernetes Cluster control plane virtual machines |
| 4 | Tanzu Kubernetes Cluster worker virtual machines |
| 5 | Harbor virtual machines |
| 6 | vSphere Cluster Services virtual machines |
| 7 | NSX-T Edge nodes |
| 8 | NSX-T Managers |
| 9 | vSAN and ESXi hosts |
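As a minimal sketch, the order in the table can be kept as a small shell checklist to print before a maintenance window. The function names `shutdown_order` and `print_shutdown_order` are hypothetical; the component list is copied from the table.

```shell
#!/bin/sh
# Shutdown order copied from the table; the first line is shut down first.
# Function names here are illustrative, not part of any VMware tooling.
shutdown_order() {
  cat <<'EOF'
vCenter Server
Supervisor Control Plane virtual machines
Tanzu Kubernetes Cluster control plane virtual machines
Tanzu Kubernetes Cluster worker virtual machines
Harbor virtual machines
vSphere Cluster Services virtual machines
NSX-T Edge nodes
NSX-T Managers
vSAN and ESXi hosts
EOF
}

# Print the list as a numbered checklist, one component per line.
print_shutdown_order() {
  shutdown_order | awk '{ printf "%d. %s\n", NR, $0 }'
}
```

Calling `print_shutdown_order` prints the nine components in order, which can be pasted into a change ticket or runbook.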

As a disclaimer, the following was tested in my home lab environment and not a production environment. If you have any questions regarding specific guidance as recommended by VMware, be sure to place a support request for official guidance and support.

Shut down the Kubernetes services on the vCenter Server

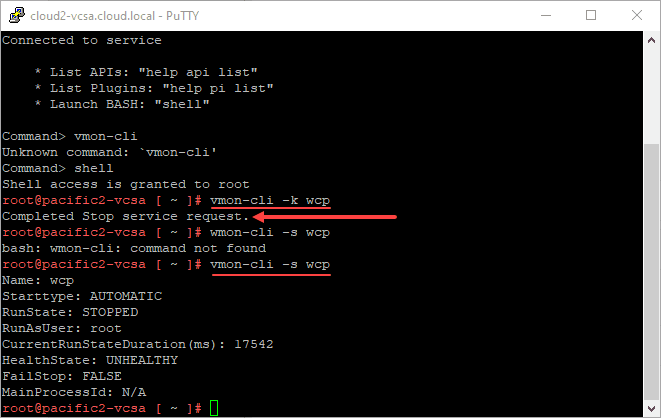

To begin, you shut down the vSphere with Tanzu workload domain components. To do this, you stop the Kubernetes services on the vCenter Server. Log in to your vCenter Server and stop the Kubernetes services by running the command:

vmon-cli -k wcp

After the services stop, you can check their status:

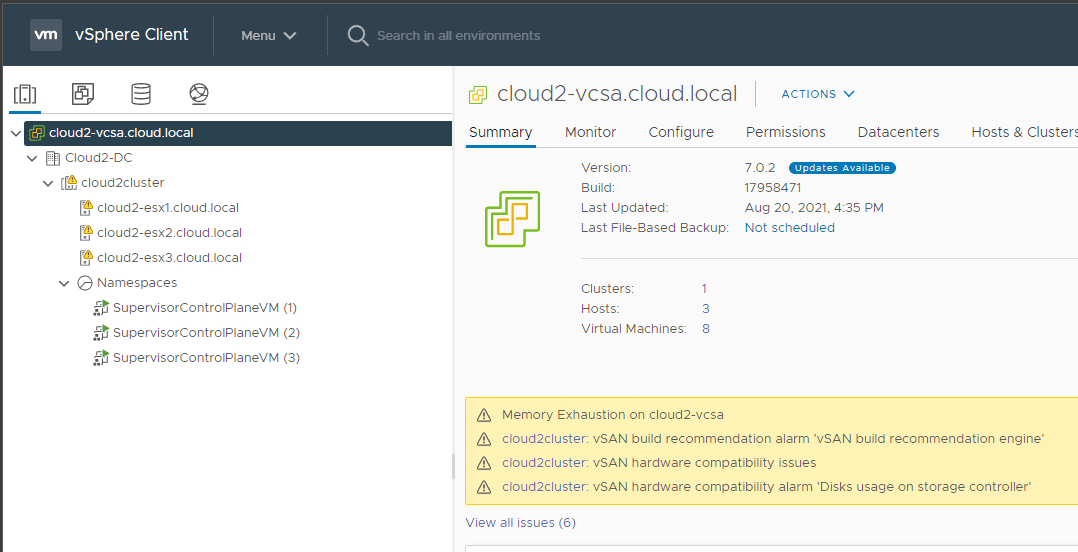

vmon-cli -s wcp

Once you stop the Kubernetes services, your visibility in the vSphere Client to the TKC namespaces goes away:

Also, if you navigate to the Workload Management section in your vSphere Client, you will see this error. This just means the Kubernetes services are stopped on the vCenter Server.

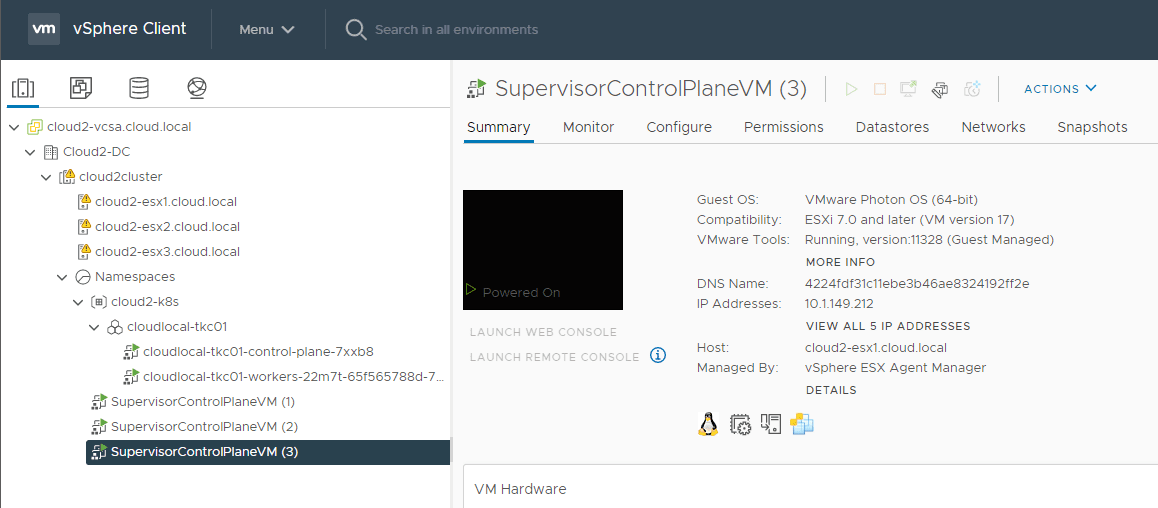

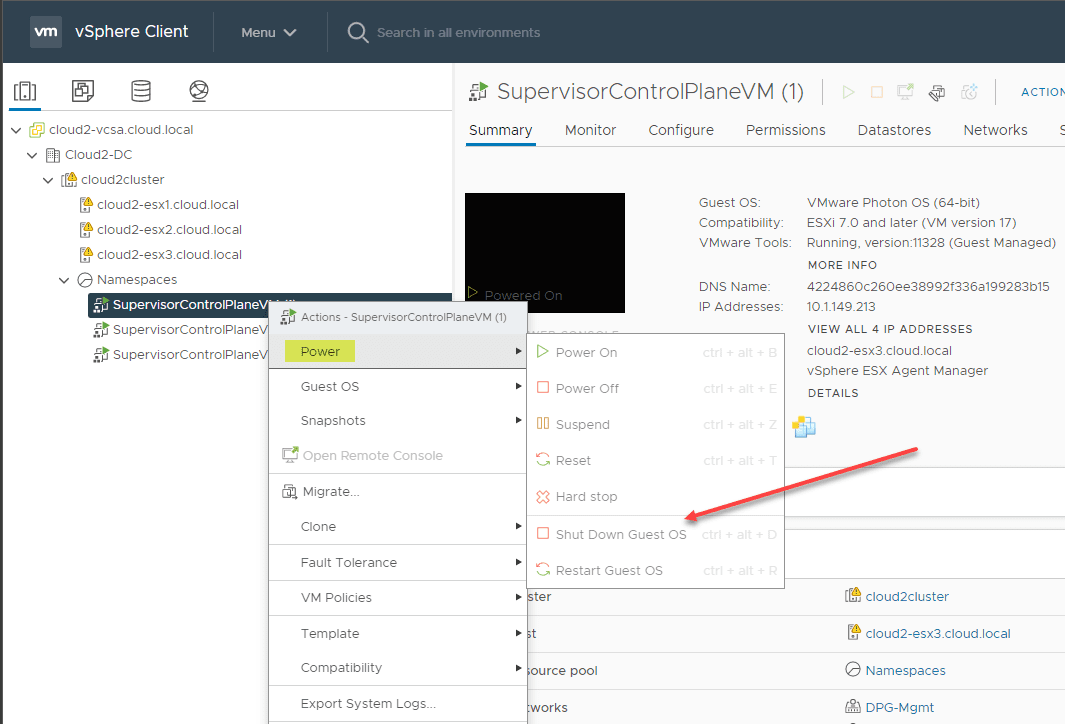

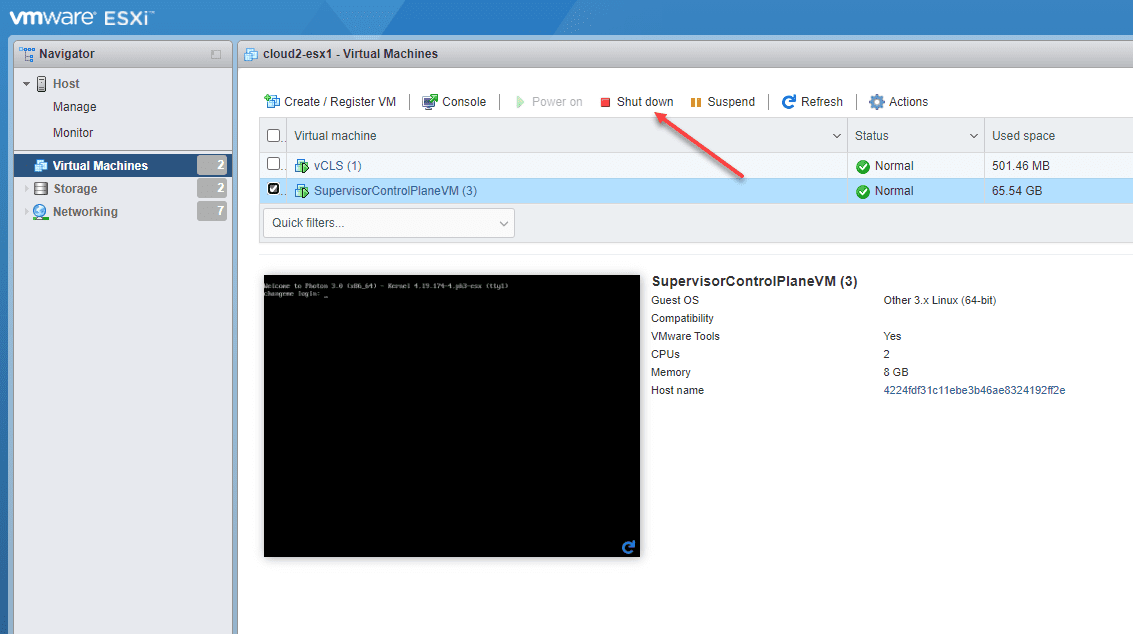
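The stop-and-check sequence for the `wcp` service can be wrapped in a small script, sketched here under a few assumptions. It assumes a shell on the vCenter Server appliance and that the status output contains a line of the form `RunState: STOPPED`. The function names and the `VMON_CLI` override variable are hypothetical and exist only to make the sketch testable.

```shell
#!/bin/sh
# Assumption: run in a shell on the vCenter Server appliance.
# VMON_CLI is a hypothetical override; by default the real vmon-cli is used.

# Print the RunState reported for the wcp service.
# Assumption: the status output has a line such as "RunState: STOPPED".
wcp_run_state() {
  "${VMON_CLI:-vmon-cli}" -s wcp |
    awk -F': *' '$1 ~ /^[ \t]*RunState$/ { print $2; exit }'
}

# Stop wcp (same command as in the post), then confirm it reports STOPPED.
stop_wcp() {
  "${VMON_CLI:-vmon-cli}" -k wcp || return 1
  state="$(wcp_run_state)"
  echo "wcp RunState: ${state:-unknown}"
  [ "$state" = "STOPPED" ]
}
```

Running `stop_wcp` returns a non-zero exit code if the service does not report `STOPPED`, which is a convenient gate before moving on to the virtual machines.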

Notice that there is no shutdown option for the SupervisorControlPlane VMs running in the vCenter inventory. The Supervisor VMs and the workload VMs have to be shut down from the ESXi host directly.

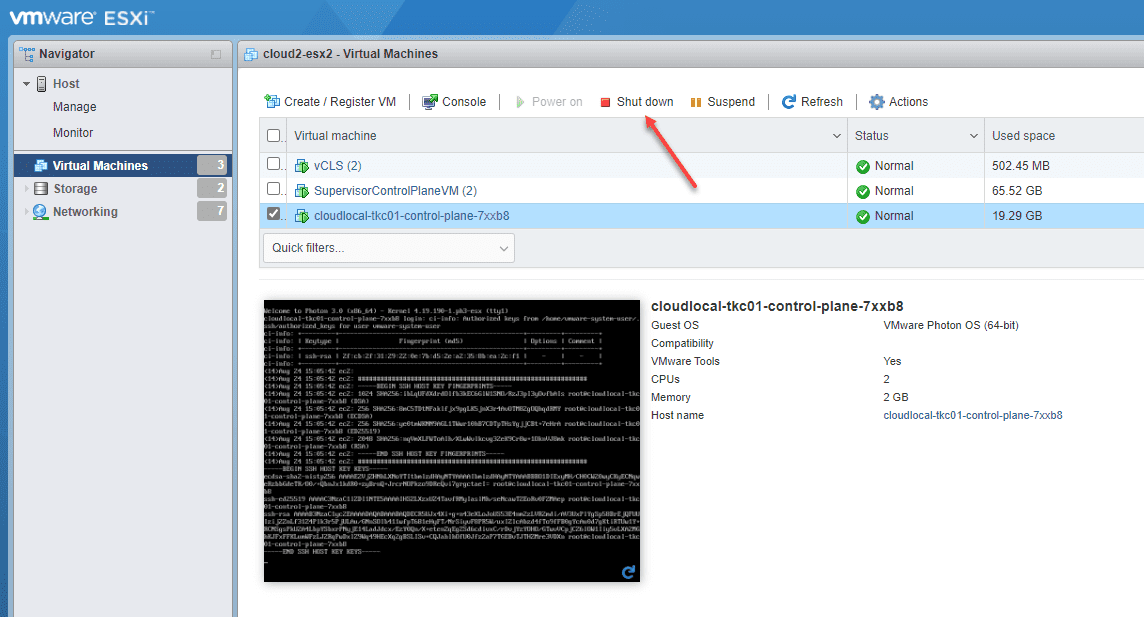

First, I shut down the TKC control plane VMs.

Next, I shut down the Supervisor control plane VM.

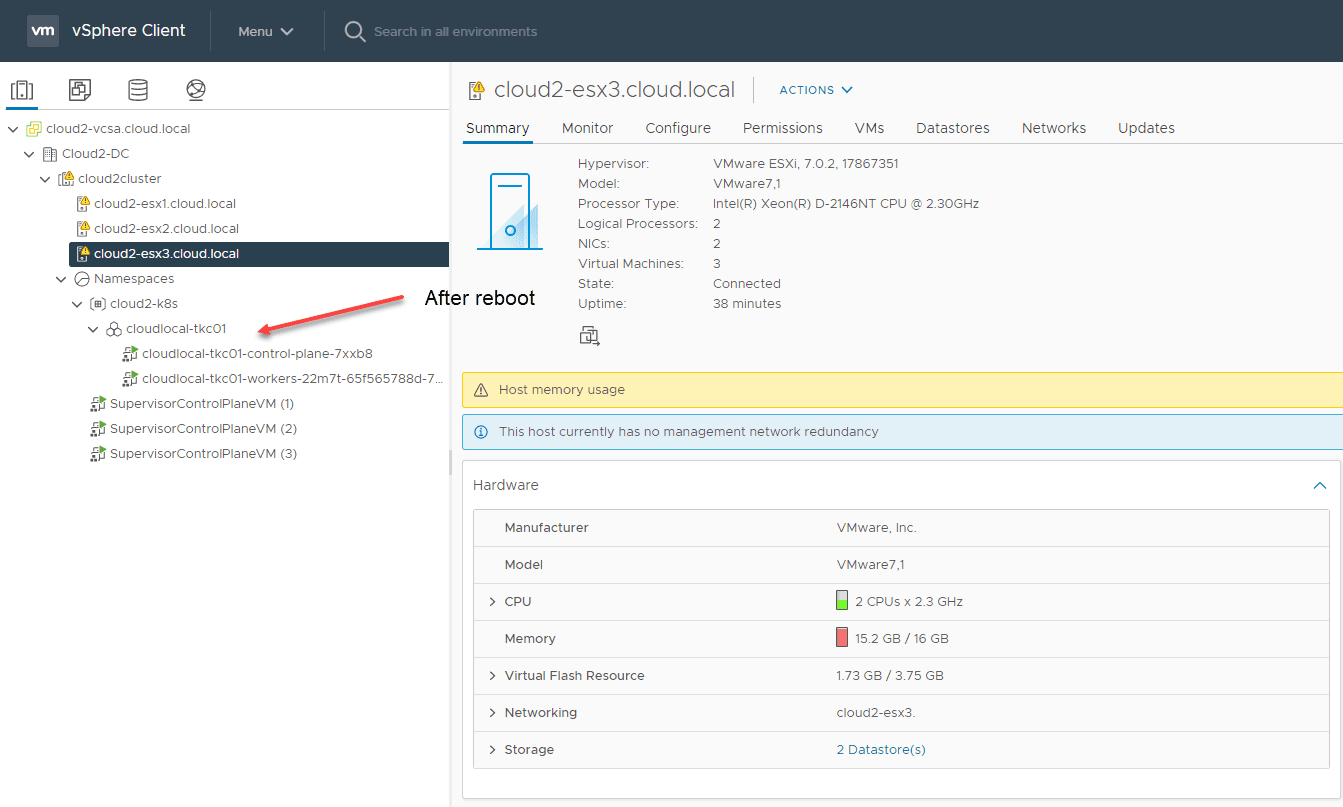
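The post does not say which host-side tool was used for these shutdowns. One possible approach, sketched here as an assumption, is `vim-cmd` in an SSH session on the ESXi host. The function names, the `VIM_CMD` override variable, and the name patterns in the usage note are hypothetical; check the actual VM names in your inventory first.

```shell
#!/bin/sh
# Assumption: run in an SSH session on the ESXi host that owns the VMs.
# VIM_CMD is a hypothetical override; by default the real vim-cmd is used.

# Print the Vmid (first column of "vim-cmd vmsvc/getallvms") for every VM
# whose inventory line matches the given pattern.
vm_ids_matching() {
  "${VIM_CMD:-vim-cmd}" vmsvc/getallvms |
    awk -v pat="$1" '$1 ~ /^[0-9]+$/ && $0 ~ pat { print $1 }'
}

# Request a guest OS shutdown for each matching VM.
# power.shutdown relies on VMware Tools in the guest; power.off is the
# hard power-off alternative if the guest does not respond.
shutdown_matching() {
  for id in $(vm_ids_matching "$1"); do
    echo "Shutting down VM id $id"
    "${VIM_CMD:-vim-cmd}" vmsvc/power.shutdown "$id"
  done
}
```

Following the order used in this walkthrough, that could look like `shutdown_matching 'control-plane'` and then `shutdown_matching 'SupervisorControlPlaneVM'`, assuming those patterns match the VM names on your host.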

Next, shut down or reboot your hosts for maintenance or other tasks. After bringing them back online, you should see your TKC services come back online without issue. In my lab, everything was running once again.
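To confirm from the vCenter Server side that the Kubernetes services are back, a polling loop around the status command is one option. This is a sketch that assumes the status output contains a `RunState: STARTED` line; the function names and the `VMON_CLI` override variable are hypothetical.

```shell
#!/bin/sh
# Assumption: run on the vCenter Server appliance once the hosts are back.
# VMON_CLI is a hypothetical override; by default the real vmon-cli is used.

# Print the RunState reported for the wcp service.
wcp_run_state() {
  "${VMON_CLI:-vmon-cli}" -s wcp |
    awk -F': *' '$1 ~ /^[ \t]*RunState$/ { print $2; exit }'
}

# Poll until wcp reports STARTED.
# $1 = maximum attempts (default 30), $2 = seconds between attempts (default 10).
wait_for_wcp() {
  attempts="${1:-30}"
  delay="${2:-10}"
  i=1
  while [ "$i" -le "$attempts" ]; do
    if [ "$(wcp_run_state)" = "STARTED" ]; then
      echo "wcp is STARTED"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "wcp did not reach STARTED" >&2
  return 1
}
```

If the service stays stopped, for example because it was stopped with `vmon-cli -k wcp` and the vCenter Server itself was never restarted, `vmon-cli -i wcp` starts it again. Whether that manual start is needed in your environment is something to verify rather than assume.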

Wrapping Up

Hopefully this walkthrough shows some of the steps needed to shut down a running TKC cluster in the proper order. Note that there may be more steps needed than I have shown in this post. I am still experimenting with TKC operations in the nested lab and will update the post with any changes. Also, please comment if you have seen additional requirements or issues.
